Challenges in Decentralized Storage

In cloud storage, decentralized networks have several advantages over traditional data centers. Decentralized storage networks spread data across many independent nodes rather than keeping it in a single centralized location. Transparently replicating data across zones increases availability and, potentially, performance, because pieces can be retrieved from nodes closer to where they are accessed. Distributing work over many geographically separate nodes also greatly improves fault tolerance, since no single point of failure can bring down the system. However, it also significantly increases the system’s complexity: nodes must stay in sync, and data must be replicated across multiple zones.

Requirements for a decentralized storage network:

1. Permissionless access, no centralized decision-making

2. Fast data uploads and retrieval

3. Privacy and security

When considering the decentralization of storage networks, incentives play a vital role in the network’s dynamics. In an untrusted environment, incentives must align with desired behavior. Focusing primarily on the storage supply side, we want to incentivize a few key behaviors.

Good Behavior:

1. Providers should be online

2. Providers should accept new data

3. Providers should serve the data they are storing when the user requests it

4. Providers should securely store the data for the agreed-upon time period

Bad Behavior:

1. Providers go offline

2. Providers refuse data

3. Providers hold data hostage or refuse to serve it

4. Providers delete data that they are paid to store

How do we incentivize providers to store and serve actual user data?

A network may subsidize its storage providers, either through its consensus mechanism (for example, a block reward) or with a fixed payment from a centralized company. A subsidy can rapidly grow a decentralized storage network’s capacity, since hosts are paid to provide storage even when there is no demand for it. However, a high block reward or other payment for “empty storage” reduces providers’ need to accept data, because the subsidy alone covers the cost of operating the hardware. If the subsidy is high enough, providers may be actively discouraged from storing or serving useful data. Host subsidization can bring stability to an early network, but it is a careful balancing act. If the subsidy isn’t maintained, or yields diminishing returns as capacity grows, hosts may exit the network, putting user data at risk.

Market-driven hosting incentives, by contrast, follow the principles of supply and demand. In these markets, hosts are paid for the storage they actually provide, at prices driven by demand for their services. As user demand for storage rises, host revenue naturally increases, encouraging hosts to expand their capacity and contributing to a responsive, self-sustaining hosting ecosystem.

This symbiotic relationship fosters network resilience and encourages decentralization. However, providers may reduce their capacity or exit the network if demand is low. In that case, user data is unlikely to be at risk, because little data is being stored in the first place. Market-driven incentives make it harder to bootstrap a fledgling network, but they are healthier in the long term.

Sia provides an open market where storage providers compete for consumer demand on price and performance. A provider with a faster connection may charge more, and consumers who value speed may still choose that host. Other consumers may be fine with slower hosts in exchange for lower costs. These open-market interactions keep costs competitive and fair, and providers are compensated only for the services they actually provide.
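The price-versus-performance trade-off can be sketched from the renter’s side. This is a hypothetical selection routine, not Sia’s host-scoring logic: the `Host` fields, the linear weighting, and all names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Host:
    # Hypothetical host listing; real host settings carry many more fields.
    name: str
    price: float       # storage price per TB-month, arbitrary currency unit
    throughput: float  # measured transfer speed in Mbps


def choose_hosts(hosts, max_price, count, speed_weight=0.5):
    """Rank hosts under a price cap; speed_weight=0 favors price, 1 favors speed."""
    affordable = [h for h in hosts if h.price <= max_price]
    if not affordable:
        return []
    cheapest = min(h.price for h in affordable)
    fastest = max(h.throughput for h in affordable)

    def score(h):
        price_score = cheapest / h.price if h.price > 0 else 1.0      # 1.0 = cheapest
        speed_score = h.throughput / fastest if fastest > 0 else 0.0  # 1.0 = fastest
        return speed_weight * speed_score + (1 - speed_weight) * price_score

    return sorted(affordable, key=score, reverse=True)[:count]


hosts = [Host("fast", 8.0, 900), Host("cheap", 2.0, 100),
         Host("balanced", 4.0, 500), Host("premium", 20.0, 1000)]
print([h.name for h in choose_hosts(hosts, max_price=10, count=1, speed_weight=1.0)])  # ['fast']
print([h.name for h in choose_hosts(hosts, max_price=10, count=1, speed_weight=0.0)])  # ['cheap']
```

Moving `speed_weight` between 0 and 1 models the two kinds of consumers described above: one willing to pay more for speed, the other content with slower hosts at lower cost.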

How do we get to 11 “9’s” of durability?

In traditional cloud storage, providers advertise targets for availability (whether data can be accessed right now) and durability (whether data survives at all), usually expressed as a count of nines in the percentage. For example, Amazon’s S3 and Cloudflare both claim 99.999999999% (11 nines) of data durability. Even so, Cloudflare and Amazon have suffered several outages in recent years that took down large portions of the web. Distributed infrastructure can mitigate such outages through built-in redundancy: instead of being tied to a single geographical zone or data center, nodes are spread across the globe, providing automatic geographical redundancy and fault tolerance. However, since providers are inherently untrusted, it must be assumed that any of them can go offline at any time.
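Because durability is quoted as a percentage, it helps to convert it into the loss probability it implies and back. A minimal sketch with illustrative helper names; it uses Python’s `decimal` module so that a value like 99.999999999% is represented exactly rather than as a rounded binary float.

```python
from decimal import Decimal


def nines(durability_percent: str) -> Decimal:
    """Number of nines in a durability percentage such as "99.999999999"."""
    durability = Decimal(durability_percent) / 100  # exact decimal arithmetic
    loss_probability = 1 - durability               # chance of losing an object
    return -loss_probability.log10()                # 1E-11 -> 11 nines


def loss_probability(n_nines: int) -> Decimal:
    """Loss probability implied by a given number of nines."""
    return Decimal(1).scaleb(-n_nines)              # 1 x 10^-n


print(nines("99.999999999"))  # 11
print(loss_probability(11))   # 1E-11
```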

Decentralized networks must also repair data as nodes go offline to ensure high availability. On Sia, this happens transparently to the user, but the user pays for the data that needs to be repaired. Managing the expansion factor of user data (the ratio of stored data to original data) and provider churn is vital because it lowers costs and keeps them predictable.

Fortunately, we can estimate the durability of data under different upload strategies, such as replication or erasure coding. The average node failure rate on Sia is a little over 10%. If we conservatively assume that 20% of nodes fail over the lifetime of a storage contract, we can predict the durability of data over that period.

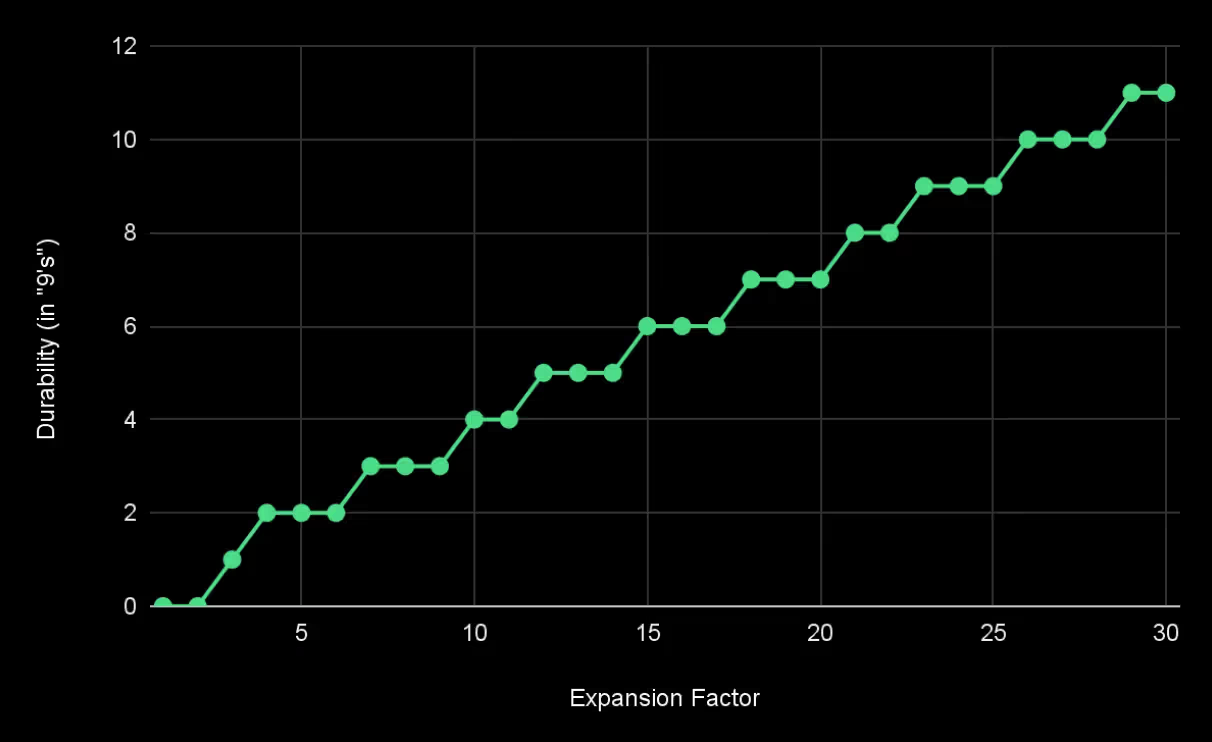

Replication stores an exact copy of the data on multiple nodes. It is very easy to implement, but it has a significant trade-off: every additional copy increases the expansion factor linearly while adding comparatively little durability.

The chart above shows that, to match the reliability of centralized providers using full-copy replication, the data must be replicated to 29 nodes. That means uploading, storing, and paying for 28 extra copies of the data.

If the user uploaded 1 GB of data with replication, it would expand to 29 GB. With the estimated 20% failure rate, the user would have to repair about 6 GB of data every period. That is a significant amount of overhead for a small amount of storage.
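The replication arithmetic can be checked directly. This sketch assumes, as the text does, that a fixed fraction of the hosts holding copies fails each period and that every lost copy is re-uploaded; the function name is hypothetical.

```python
def replication_overhead(data_gb: float, copies: int, failure_rate: float):
    """Return (stored GB, GB re-uploaded per period) for full-copy replication.

    Assumes a fraction `failure_rate` of the hosts holding a copy fail each
    period and that every lost copy is uploaded again to a new host.
    """
    stored_gb = data_gb * copies          # expansion factor equals the copy count
    repair_gb = stored_gb * failure_rate  # size of the copies that were lost
    return stored_gb, repair_gb


stored, repair = replication_overhead(data_gb=1.0, copies=29, failure_rate=0.2)
print(f"stored: {stored:.1f} GB, repaired per period: {repair:.1f} GB")
# stored: 29.0 GB, repaired per period: 5.8 GB
```

The 5.8 GB result is what the text rounds to 6 GB.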

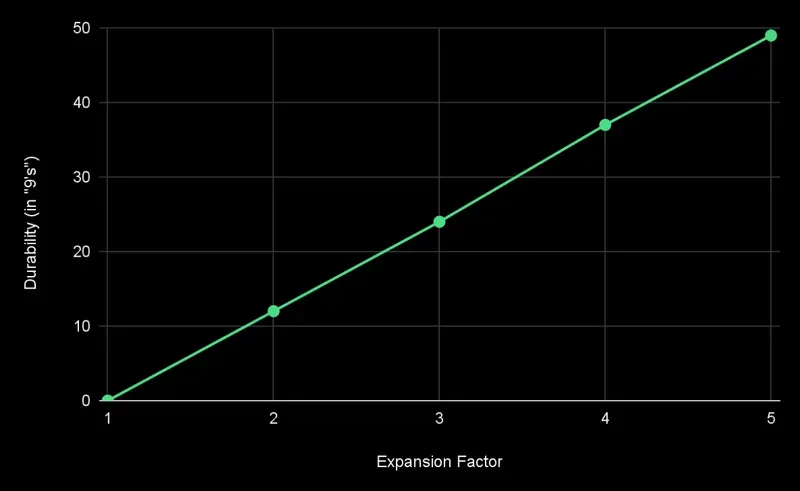

Sia has used erasure codes to ensure data durability since its launch in 2014. Erasure codes provide much better durability at far lower expansion. When data is uploaded with an erasure code, it is encoded into m chunks, any n of which can recover the original data; this is commonly referred to as n-of-m.
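To make the n-of-m property concrete, here is a minimal sketch of a Reed-Solomon-style code built from polynomial interpolation over a prime field. It is not Sia’s implementation: it works on small integer symbols rather than bytes packed into sectors, and the field size and function names are assumptions chosen for clarity. The n data symbols define a polynomial of degree below n, each chunk is one evaluation of that polynomial, and any n evaluations determine the polynomial again.

```python
import random

P = 2**61 - 1  # a prime; all arithmetic is done modulo P


def interpolate(points, t):
    """Evaluate at t the unique polynomial of degree < len(points) through `points`."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for k, (xk, _) in enumerate(points):
            if k != j:
                num = num * (t - xk) % P
                den = den * (xj - xk) % P
        total = (total + yj * num * pow(den, -1, P)) % P  # Lagrange basis term
    return total


def encode(data, m):
    """Encode n data symbols into m chunks (x, y); any n chunks suffice."""
    base = list(enumerate(data, start=1))  # chunks 1..n carry the data itself
    return [(x, interpolate(base, x)) for x in range(1, m + 1)]


def decode(chunks, n):
    """Recover the n data symbols from any n chunks with distinct x values."""
    return [interpolate(chunks[:n], x) for x in range(1, n + 1)]


data = list(b"Sia shard!")                       # n = 10 symbols, each < P
chunks = encode(data, m=30)                      # a 10-of-30 layout
survivors = random.Random(1).sample(chunks, 10)  # any 20 chunks may be lost
print(bytes(decode(survivors, n=10)))            # b'Sia shard!'
```

Because the first n chunks are the data itself (a systematic code), reading them requires no decoding; interpolation only runs when some of them are missing.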

The erasure parameters are configurable on Sia, but the default is 10-of-30. At a high level, this means that when data is uploaded, it is encoded into 30 equal-sized chunks, each one tenth the size of the original data, with each provider receiving a single chunk. That gives an expansion factor of only 3x. We can estimate the data’s durability as before, assuming a 20% node failure rate over the contract’s lifetime.
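One simple way to estimate durability is to treat each host as failing independently with probability p over the contract, with no repair in between. Data stored as n-of-m is then lost only if more than m - n chunks are lost, which is a binomial tail, and full replication is the special case of a 1-of-m code. This is a simplified model chosen for illustration and is not necessarily the one behind the charts, so its absolute numbers should not be read as a reproduction of them; the sketch compares the two strategies at the same 3x expansion.

```python
from math import comb, log10


def replication_loss(copies: int, p: float) -> float:
    """Probability that every full copy is lost (independent failures)."""
    return p ** copies


def erasure_loss(n: int, m: int, p: float) -> float:
    """Probability that more than m - n of the m chunks are lost (n-of-m code)."""
    return sum(comb(m, f) * p**f * (1 - p) ** (m - f) for f in range(m - n + 1, m + 1))


def nines(loss: float) -> float:
    """Durability expressed as a count of nines."""
    return -log10(loss)


p = 0.2  # assumed per-host failure probability over the contract
for label, loss in [("3 full copies", replication_loss(3, p)),
                    ("10-of-30", erasure_loss(10, 30, p))]:
    print(f"{label:>13} (3x expansion): {nines(loss):.1f} nines")
```

Under these assumptions the erasure-coded layout is several orders of magnitude less likely to lose data than three full copies, even though both store exactly three times the original data.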

As the chart above shows, erasure codes provide significantly higher data durability, exceeding centralized providers’ durability at just 3x expansion and saving significant bandwidth and storage costs. If the user uploaded 1 GB of data with erasure codes, it would expand to only 3 GB while providing over 20 “9’s” of durability. With the estimated 20% failure rate, the user would need to repair only around 600 MB per period (six lost 100 MB chunks). That is significantly better than replication.
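The same accounting for the default 10-of-30 layout, again assuming that 20% of chunks are lost per period and re-uploaded; the function name is hypothetical.

```python
def erasure_overhead(data_gb: float, n: int, m: int, failure_rate: float):
    """Return (chunk GB, stored GB, lost chunks, GB re-uploaded) for an n-of-m code."""
    chunk_gb = data_gb / n                   # each chunk is 1/n of the data
    stored_gb = chunk_gb * m                 # expansion factor m / n
    lost = round(m * failure_rate)           # rounded expected chunks lost per period
    repair_gb = lost * chunk_gb              # replacement chunks uploaded
    return chunk_gb, stored_gb, lost, repair_gb


chunk, stored, lost, repair = erasure_overhead(1.0, n=10, m=30, failure_rate=0.2)
print(f"chunk: {chunk * 1000:.0f} MB, stored: {stored:.1f} GB, "
      f"lost: {lost} chunks, repaired per period: {repair * 1000:.0f} MB")
# chunk: 100 MB, stored: 3.0 GB, lost: 6 chunks, repaired per period: 600 MB
```

This counts only the uploaded replacement chunks, matching how the replication example counts repaired copies; with a standard Reed-Solomon code, rebuilding lost chunks also requires downloading any n surviving chunks first.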

What is the purpose of blockchain?

In centralized systems, legal frameworks allow two trusted parties to exchange currency for storage and bandwidth. In a permissionless environment, you can’t assume a provider is trustworthy. This is where blockchain comes in. Sia has been running as a layer-one blockchain for nearly a decade. Its blockchain is very similar to Bitcoin’s and uses the UTXO model, with the addition of a smart contract specifically for storage. Running a layer-one blockchain comes with many challenges. First and foremost, interoperating with other ecosystems is much more complicated. While this has slowly been changing, integrating with Sia takes significantly more work than integrating a token on Ethereum or Cardano. However, these trade-offs are worth it because they let Sia optimize its blockchain for its primary use case: storing and retrieving important data. That means storage proofs and contracts have low overhead and, most importantly, low cost.
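Storage proofs let a host show that it still holds the data without re-sending all of it. Sia’s storage proofs are based on Merkle trees: the contract commits to a Merkle root, and the host proves possession of a randomly challenged segment by revealing it along with the sibling hashes on its path to the root. The sketch below illustrates only that idea; SHA-256, the 64-byte segment size, the leaf and node prefixes, and the power-of-two segment count are simplifying assumptions, not Sia’s exact format.

```python
import hashlib
import os
import random

SEGMENT = 64  # bytes per leaf (assumed for this sketch)


def leaf_hash(segment: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + segment).digest()  # prefix separates leaves from nodes


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def build_levels(data: bytes):
    """All tree levels, leaves first; assumes a power-of-two number of segments."""
    level = [leaf_hash(data[i:i + SEGMENT]) for i in range(0, len(data), SEGMENT)]
    levels = [level]
    while len(level) > 1:
        level = [node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels, index):
    """Host side: sibling hashes on the path from leaf `index` to the root."""
    proof = []
    for level in levels[:-1]:
        proof.append(level[index ^ 1])  # sibling differs only in the lowest bit
        index //= 2
    return proof


def verify(root, segment, index, proof):
    """Verifier side: rebuild the root from one segment and its proof."""
    acc = leaf_hash(segment)
    for sibling in proof:
        acc = node_hash(acc, sibling) if index % 2 == 0 else node_hash(sibling, acc)
        index //= 2
    return acc == root


data = os.urandom(SEGMENT * 16)            # 16 segments of renter data
levels = build_levels(data)
root = levels[-1][0]                       # the contract only needs this 32-byte root
challenge = random.randrange(16)           # hypothetical random challenge index
segment = data[challenge * SEGMENT:(challenge + 1) * SEGMENT]
proof = prove(levels, challenge)
print(len(proof), verify(root, segment, challenge, proof))  # 4 True
```

The proof grows with the logarithm of the data size (four hashes for sixteen segments here), which is what keeps such proofs small enough to check cheaply.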

Sia’s smart contract serves three purposes. First, it tracks the data the renter has uploaded. Second, it acts as an open micro-payment channel that enables instant escrowed payments between two untrusted parties. Third, it is a slashing mechanism that automatically penalizes the host if it fails to prove that it is storing the agreed-upon data. Uploading data is almost instant: the host and renter sign a revision, and the data is immediately bound to the contract. These interactions happen automatically as part of Sia’s consensus and provide a fast, efficient way for consumers to interact with storage providers.
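The three roles can be illustrated with a toy model in which each revision moves funds from the renter’s escrow to the host and updates the data commitment, and the final outcome depends on whether the host proves storage. Everything here is hypothetical: the class and field names do not mirror Sia’s types, signatures are omitted, and the rule that a missed proof forfeits the host’s earnings and collateral is a simplification rather than Sia’s exact payout logic.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FileContract:
    """Toy storage contract; names and rules are hypothetical, signatures omitted."""
    renter_funds: int        # renter money still held in escrow
    host_earned: int         # money already moved to the host by revisions
    host_collateral: int     # host money at stake if it fails to prove storage
    data_root: bytes = b""   # commitment (e.g. a Merkle root) to the stored data
    revision: int = 0        # the highest signed revision is the one that counts

    def revise(self, payment: int, new_root: bytes) -> "FileContract":
        """Micro-payment plus data update, both in one new revision."""
        if not 0 <= payment <= self.renter_funds:
            raise ValueError("payment must be between 0 and the renter's escrowed funds")
        return replace(self,
                       renter_funds=self.renter_funds - payment,
                       host_earned=self.host_earned + payment,
                       data_root=new_root,
                       revision=self.revision + 1)

    def settle(self, proof_ok: bool) -> dict:
        """At expiry: a valid proof pays the host; a missed one forfeits its stake."""
        at_stake = self.host_earned + self.host_collateral
        if proof_ok:
            return {"renter": self.renter_funds, "host": at_stake, "forfeited": 0}
        return {"renter": self.renter_funds, "host": 0, "forfeited": at_stake}


c = FileContract(renter_funds=100, host_earned=0, host_collateral=50)
c = c.revise(payment=10, new_root=b"root after first upload")
c = c.revise(payment=5, new_root=b"root after second upload")
print(c.revision, c.renter_funds, c.host_earned)  # 2 85 15
print(c.settle(proof_ok=False))  # {'renter': 85, 'host': 0, 'forfeited': 65}
```

Making each revision an immutable value keeps the channel’s history explicit: only the latest, highest-numbered revision determines the payout, and the total of escrow, earnings, and collateral never changes between revisions.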

Significant opportunities and challenges mark the journey toward decentralizing cloud storage. The advantages of decentralized storage systems include enhanced data availability, fault tolerance, and the potential for improved performance. Yet, these benefits come at the cost of increased complexity and the need for robust incentive structures to ensure providers engage in beneficial behaviors. Innovations like erasure coding and the strategic application of blockchain technology have emerged as vital tools in overcoming these hurdles, offering solutions for reliable data storage without the vulnerability to single points of failure inherent in traditional centralized systems.

As decentralized storage networks continue to evolve, the focus must remain on developing strategies that ensure data durability, privacy, and security while also maintaining an economically viable model for providers and users. Decentralized storage networks form the base layer in the shift towards a more resilient, efficient, and user-centric model of cloud storage.